The earliest known deepfake videos surfaced in 2017, and since then deepfakes have become increasingly common, especially as AI technology has been more widely adopted. While deepfakes can be used for harmless entertainment, like putting Sylvester Stallone in the role of Kevin from Home Alone, research has shown that deepfake-related identity fraud cases have skyrocketed in recent years, rising by a whopping 3,000% in the US from 2022 to 2023.

Learning about deepfake technology and how it can be detected is essential for businesses to protect their customers and assets.

First things first – what is a deepfake?

A deepfake is an image, audio clip, or video generated with a specific kind of machine learning. According to the University of Virginia, deepfakes are created with two algorithms. One algorithm is fed examples and tries to produce the most convincing possible replicas of the images or videos. The other algorithm is trained to detect fake images or videos. The two algorithms take turns, each improving in response to the other's output, until the final image or video is extremely realistic.
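
The two-algorithm setup described above is the idea behind a generative adversarial network (GAN). The sketch below is a deliberately tiny one-dimensional toy in plain Python, not a real deepfake model: the "generator" can only learn an offset `b` that shifts random noise, and the "discriminator" is a single logistic unit. The data distribution, learning rate, step count, and function names are illustrative assumptions. Training alternates between the two, and the generator's output drifts toward the real data as it learns to fool the discriminator.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 4.0, steps: int = 4000, batch: int = 64,
                  lr: float = 0.05, seed: int = 0) -> float:
    """Train a 1-D toy GAN and return the generator's learned offset b.

    Generator:      G(z) = z + b, with z ~ N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), its estimate that x is real
    Real data:      x ~ N(real_mean, 1)
    """
    rng = random.Random(seed)
    b = 0.0          # generator parameter (starts far from the real data)
    w, c = 0.0, 0.0  # discriminator parameters

    for _ in range(steps):
        # 1) Discriminator step: get better at telling real samples from fakes.
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        # Gradients of the cross-entropy loss -[log D(real) + log(1 - D(fake))].
        grad_w = (sum((d - 1.0) * x for d, x in zip(d_real, real))
                  + sum(d * x for d, x in zip(d_fake, fake))) / batch
        grad_c = (sum(d - 1.0 for d in d_real) + sum(d_fake)) / batch
        w -= lr * grad_w
        c -= lr * grad_c

        # 2) Generator step: move b so the discriminator rates fakes as real
        # (minimizes the "non-saturating" loss -log D(G(z))).
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_b = sum((sigmoid(w * x + c) - 1.0) * w for x in fake) / batch
        b -= lr * grad_b

    return b


if __name__ == "__main__":
    print(f"learned offset b = {train_toy_gan():.2f} (real data mean is 4.0)")
```

In GAN-based deepfake tools both algorithms are deep neural networks trained on large image, audio, or video datasets, but the alternating contest between them follows the same pattern.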

In the past, similar types of fraud had to be carried out through manual alteration, like photoshopping and video editing. These processes were time-consuming, and the results were often still easy to identify as fake. Because deepfakes are generated by AI trained specifically to produce realistic output, they are much harder to identify as fraudulent.

How are deepfakes used to commit fraud?

Deepfake fraud is committed by impersonating real individuals or creating entirely new identities, most often through voice and video. Reportedly, 37% of businesses globally have experienced deepfake voice fraud and 29% have experienced deepfake video fraud. The financial sector is a primary target, with about half of companies in the industry recently experiencing this type of fraudulent activity.

Account openings

Deepfake fraud is used to open new accounts with a synthetic identity, which combines real and false information into a new identity used to commit crimes. Synthetic identity fraud was a threat before deepfakes came about, but deepfake technology makes it possible to create more convincing false identities that circumvent security measures. These identities are often used to open bank and credit card accounts or to take out loans.

Account takeovers

Many accounts rely on biometric identity verification, such as a face ID, for access. Perpetrators of deepfake fraud can use the technology to mimic this biometric data and gain access to the account. Once inside, the fraudster can not only reach anything the account previously protected but also change the contact information and password so that the account owner stays locked out for an extended period. This form of fraud is becoming extremely popular: according to SC Media, deepfake “face swap” attacks on remote identity verification increased by 704% in 2023.

Phishing and impersonation

Phishing and impersonation are some of the most widely publicized forms of deepfake fraud, because they can be carried out in many different ways and are frequently successful. On an individual scale, deepfake impersonation often takes the form of a phone call in which the criminal uses AI to imitate the voice of a friend or family member and tricks the victim into sending money, usually by inventing an emergency. On a larger scale, deepfake fraud has been used to steal millions of dollars from companies around the world. In February 2024, an elaborate scam tricked a finance worker into paying scammers $25 million: deepfake technology was used on a video call to impersonate the company’s chief financial officer, as well as several other employees.

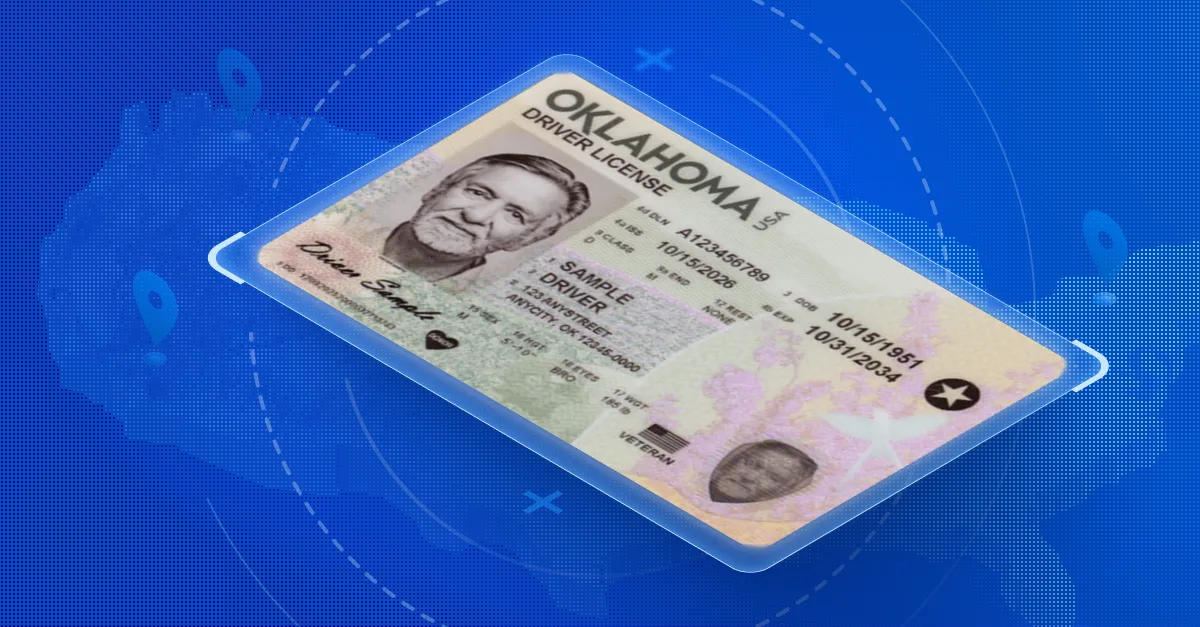

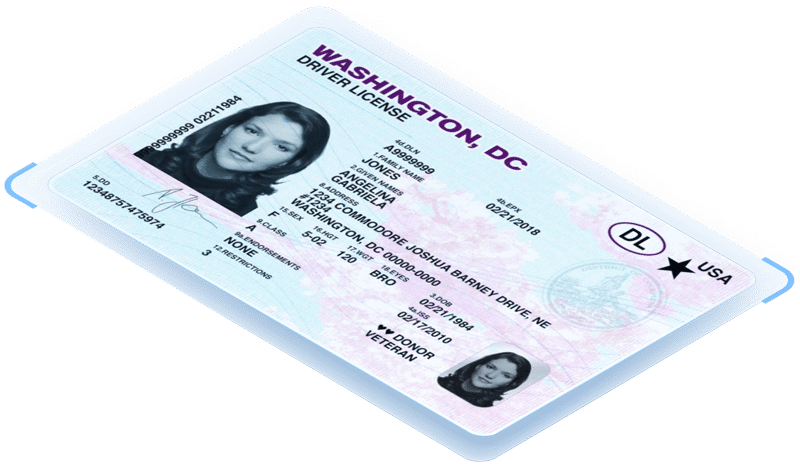

Deepfake IDs

Deepfake technology is being used to make more realistic fraudulent IDs. These fake IDs either use a completely fabricated identity or stolen personal information from a real person to create documents that are much less clearly fraudulent than the run-of-the-mill fake ID used to access a college bar. In these cases, ID authentication, a process that combines Adaptive AI software and specialty hardware capable of multi-light scanning, is needed to detect these IDs so that they cannot be used for criminal activity.

Criminals who commit deepfake fraud also use more traditional ID fraud. The scammers who used deepfake impersonation to steal $25 million were also found with eight stolen identity cards when apprehended, all of which had been reported lost by their owners. These IDs were used to make 90 fraudulent loan applications and 54 fraudulent bank account registrations within a three-month span. Deepfake IDs are a significant threat to businesses, especially those that rely only on front and back images of an ID or perform only light validation on those images.

Why is deepfake fraud becoming more prevalent?

Old fraud tactics aren’t working anymore

While fraudsters aren’t any less desperate to commit fraud, users and businesses are getting smarter. Businesses have implemented security measures to make common types of fraud, like email and SMS phishing, more difficult. Individuals are also more aware of older fraud tactics as cybersecurity training has become standard at most workplaces and media outlets routinely report on these topics. This has caused fraudsters to create new methods of duping people.

Increased use of digital identity verification

More companies are relying on digital identity verification, and while this has opened up access and improved user experiences in many cases, it has also opened up opportunities for fraud. As banking, healthcare, government programs, and educational institutions increasingly rely on biometric identity verification, like a face ID, they must take measures to fight deepfake fraud.

Deepfake technology becoming more accessible

Along with other forms of AI, deepfake generation is quickly becoming more accessible, with deepfake-generating apps and websites cropping up for public use. Deepfake fraud is no longer just for the technically skilled; average fraudsters are increasingly able to get their hands on easy-to-use tools, often at no cost. The lower the barrier to entry gets, the more we will see this technology being used.

What can be done to prevent deepfake fraud?

While some poorly executed deepfakes can be easily detected, the vast majority are very difficult to spot, making fraud prevention tools more essential than ever.

Multifactor authentication

Multifactor authentication is a common first step in fraud prevention. Most online services already use it: in addition to the account password, the user must complete another verification method to gain access, often by entering a code sent to the account holder’s phone number or email. While multifactor authentication remains an essential fraud prevention tool, its widespread use has led fraudsters to find weaknesses to exploit.
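
As a rough illustration of the code-by-phone-or-email step, here is a minimal server-side sketch in Python. It assumes a single-process, in-memory store; the class and method names, the five-minute lifetime, and the one-attempt-per-code policy are illustrative assumptions, and actual delivery by SMS or email is left out. It uses the standard library's `secrets` module to generate codes and `hmac.compare_digest` for a constant-time comparison.

```python
import hmac
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class PendingCode:
    code: str
    expires_at: float


class OneTimeCodeStore:
    """Hypothetical in-memory store for one-time verification codes."""

    def __init__(self, ttl_seconds: float = 300,
                 clock: Callable[[], float] = time.time):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._pending: Dict[str, PendingCode] = {}

    def issue(self, account_id: str) -> str:
        """Create a fresh 6-digit code, replacing any earlier one for this account."""
        code = f"{secrets.randbelow(1_000_000):06d}"
        self._pending[account_id] = PendingCode(code, self.clock() + self.ttl_seconds)
        return code  # the caller would send this by SMS or email

    def verify(self, account_id: str, submitted: str) -> bool:
        """Check a submitted code; each issued code allows exactly one attempt."""
        pending = self._pending.pop(account_id, None)
        if pending is None or self.clock() > pending.expires_at:
            return False
        # Constant-time comparison avoids leaking how many characters matched.
        return hmac.compare_digest(submitted.encode(), pending.code.encode())
```

Because the code travels over SMS or email, anyone who can intercept those channels can also pass the check, which is one reason this step is only a first layer of defense.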

Utilizing AI

It may seem counterintuitive that the technology used to create deepfakes could also be the solution for detecting them, but because AI is so capable, it is often the most reliable way to prevent deepfake fraud. As the technology to create deepfakes improves, so does the technology to safeguard against it.

AI and machine learning fraud prevention tools are trained for anomaly detection and pattern recognition, which means they can efficiently spot signs of fraud that might otherwise go unnoticed. When updated with new datasets, these tools can also keep learning, improving their detection and catching new signs of fraud as they emerge. Older, human-driven forms of fraud detection are considerably less effective and slower to adapt than AI fraud detection methods.
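
Production fraud tools rely on trained machine learning models, which are beyond a short example. As a much simpler stand-in that shows the basic idea of anomaly detection, the Python sketch below flags transaction amounts that sit far from an account's typical behavior using the modified z-score (based on the median and the median absolute deviation). The function name, sample data, and 3.5 cutoff are illustrative assumptions; 3.5 is a commonly cited threshold for this score.

```python
from statistics import median
from typing import List, Sequence


def flag_anomalies(amounts: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return the indices of amounts whose modified z-score exceeds threshold."""
    med = median(amounts)
    # Median absolute deviation: a spread estimate that a few outliers can't inflate.
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        # At least half the values equal the median; treat any other value as unusual.
        return [i for i, a in enumerate(amounts) if a != med]
    # 0.6745 rescales the MAD so scores are comparable to ordinary z-scores
    # when the data are roughly normally distributed.
    return [i for i, a in enumerate(amounts)
            if abs(0.6745 * (a - med) / mad) > threshold]


# flag_anomalies([52, 48, 50, 51, 49, 47, 53, 5000]) returns [7]
```

Median-based statistics are used instead of the mean and standard deviation so that an outlier does not drag the baseline toward itself; re-running the check as new transactions arrive is only a crude analogue of the retraining described above.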

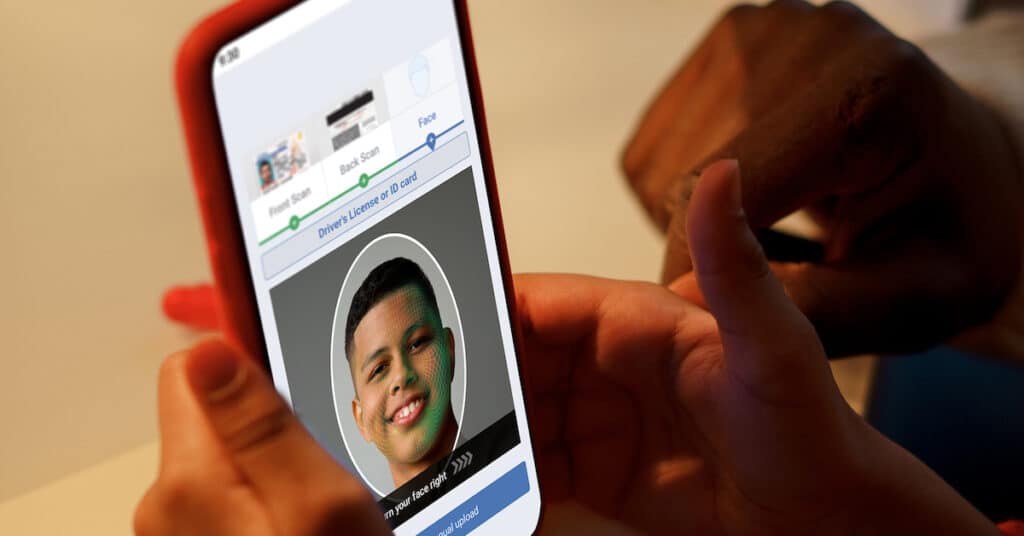

Liveness detection and anti-spoofing

Many businesses have begun implementing facial matching technology as digital identity verification has become more common. This allows customers to open accounts or access sensitive data digitally but can leave businesses vulnerable to some of the most common uses of deepfake fraud if the solution does not also employ liveness detection and anti-spoofing measures.

While facial matching checks to see if biometric data, generally the user’s facial features, matches the identity on file or provided in the form of an ID, liveness detection uses algorithms to determine if the face being presented belongs to a live person as opposed to a picture or video. Anti-spoofing measures add an extra layer of security – since more sophisticated deepfake technology is able to mimic some of the basic liveness checks, anti-spoofing technology aids in determining if the user is a living person or a fraudulent representation.
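
The sketch below shows, in simplified form, how facial matching and one style of liveness check (an active challenge-response, in which the user must perform randomly chosen actions) can be combined into a single decision. It assumes that an upstream face-recognition model has already turned the ID photo and the selfie into numeric embedding vectors, and that a separate detector reports which actions the user performed; those models, the action names, and the 0.8 similarity threshold are hypothetical. A prerecorded video is unlikely to perform a random sequence of prompts in the right order, though sophisticated deepfakes can mimic basic checks like this one, which is why anti-spoofing is layered on top.

```python
import math
import secrets
from typing import List, Sequence

# Hypothetical action labels that a motion detector could report.
ACTIONS = ["blink", "turn_left", "turn_right", "smile", "nod"]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def face_matches(id_embedding: Sequence[float], selfie_embedding: Sequence[float],
                 threshold: float = 0.8) -> bool:
    """Facial matching: does the selfie appear to show the person on the ID?"""
    return cosine_similarity(id_embedding, selfie_embedding) >= threshold


def issue_challenge(length: int = 3) -> List[str]:
    """Pick a random, non-repeating sequence of actions the user must perform."""
    return secrets.SystemRandom().sample(ACTIONS, length)


def passes_liveness(challenge: Sequence[str], observed_actions: Sequence[str]) -> bool:
    """Challenge-response liveness: were the prompted actions performed in order?"""
    return list(observed_actions) == list(challenge)


def verify_user(id_embedding: Sequence[float], selfie_embedding: Sequence[float],
                challenge: Sequence[str], observed_actions: Sequence[str]) -> bool:
    # Both checks must pass: a matching face alone could be a photo or a replayed video.
    return (face_matches(id_embedding, selfie_embedding)
            and passes_liveness(challenge, observed_actions))
```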

Businesses that rely on facial matching technology, especially those in high-risk industries like banking, must use software that includes liveness detection and anti-spoofing measures to defend against deepfake fraud.

Document liveness is also becoming increasingly necessary, because it prevents fraudsters from using images of IDs (including AI-generated fake ID images) in place of a physical document in their possession.

Third-party database verification

Third-party identity checks compare the information an applicant provides against outside databases, such as USPS address records, DMV databases, and Social Security Administration records. Third-party checks have many important applications; here, verifying information against a variety of databases adds a layer of identity proofing and is especially helpful in preventing deepfake-enabled synthetic identity fraud.
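
A minimal sketch of this kind of cross-check follows, with small in-memory dictionaries standing in for Social Security, postal address, and motor-vehicle records. These are not real APIs, and the field names and the any-failure-goes-to-review rule are assumptions. The example shows why the approach helps against synthetic identities: a real Social Security number paired with a different person's name fails corroboration even if the face and ID images look convincing.

```python
from typing import Dict, Set

Record = Dict[str, str]


def normalize(value: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't matter."""
    return " ".join(value.lower().split())


def cross_check(applicant: Record,
                ssn_records: Dict[str, Record],
                deliverable_addresses: Set[str],
                license_records: Dict[str, Record]) -> Dict[str, bool]:
    """Compare applicant-supplied fields with each (hypothetical) record source."""
    ssn_rec = ssn_records.get(applicant["ssn"])
    dl_rec = license_records.get(applicant["license_number"])
    name = normalize(applicant["name"])
    address = normalize(applicant["address"])
    return {
        "ssn_matches_name_and_dob": (ssn_rec is not None
                                     and normalize(ssn_rec["name"]) == name
                                     and ssn_rec["dob"] == applicant["dob"]),
        "address_deliverable": address in {normalize(a) for a in deliverable_addresses},
        "license_matches": (dl_rec is not None
                            and normalize(dl_rec["name"]) == name
                            and normalize(dl_rec["address"]) == address),
    }


def needs_manual_review(results: Dict[str, bool]) -> bool:
    # Assumed policy: any failed check sends the application to a human reviewer.
    return not all(results.values())
```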

Regulatory action

There is currently no federal law that bans deepfakes. However, the FTC has proposed extending its existing rule against impersonating businesses and government agencies so that it would also cover the impersonation of individuals, a change intended in part to address AI-generated deepfakes. While the threat of fines or other penalties may not deter all criminal use of this technology, it may help slow the current extreme growth trends.

Staying informed of risks and trends

Businesses at risk of deepfake fraud should educate their employees and customers on how to detect and prevent this type of fraud. They should also stay informed about deepfake fraud trends in order to catch criminals early. UK-based Sumsub published research showing that the majority of deepfakes are coming from China, Brazil, and Pakistan.

Knowing more about this type of fraud and where it may originate can help businesses keep their customers and assets safe. If you are interested in learning more about how Adaptive AI ID authentication can help keep your business safe, reach out to one of our identity experts.